
Understanding Anxiety in Children

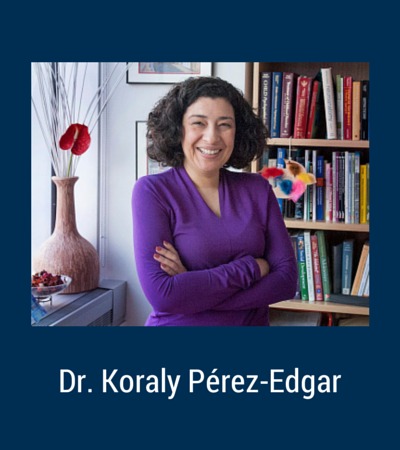

(Researcher Looks To Eye-Tracking Device To Better Understand Anxiety in Children)


The following article by Lauren Ingram was originally published on PENN STATE NEWS

Koraly Perez-Edgar, a researcher in Penn State’s Department of Psychology, takes a remote-operated toy spider from a tall bookcase in her office, turns it on and places it on the linoleum floor. It’s gangly, about the size of a shoebox, with eight fuzzy black legs, beady eyes and plastic fangs. With the push of a button, the arachnid on wheels zooms forward and out of the room.

For some youngsters, the spider is frightening. For others, it’s simply a funny-looking plaything. Why some kids squeal with delight and others with trepidation is what Perez-Edgar is hoping to tap into.


Perez-Edgar uses the toy as a prop in research studies aimed at identifying babies and children who might be at risk for developing anxiety disorders. To do this, her research team is working with Pupil Labs, a German technology firm, to build an eye-tracking visor for an upcoming pilot study. The device will capture eye gaze information that could lead to a better understanding of anxiety disorders in children and ultimately help parents, teachers and doctors more effectively predict, recognize and diagnose such conditions.


Eye tracking — the process of watching where a person is looking — is an ideal technique for what Perez-Edgar wants to accomplish.

"Because such information as gaze direction and gaze point are tied to the brain and learning, the eye-tracking device will help us understand exactly how a baby or child is experiencing his or her environment and how they react to threat stimuli," Perez-Edgar said. "The notion is that kids who are highly reactive to sensory stimuli are at an increased risk for anxiety disorders. So, these eye-tracking clues, when coupled with other behavioral, genetic and biological information we know about the child, can help us begin to understand behavior patterns as early as within the first few months of life,” she added.

Until now, it’s been difficult for scientists to do these kinds of studies with babies and children. Though proprietary mobile eye-tracking models with machine-learning capabilities exist, they often cost upwards of $30,000 and are used primarily by marketing and consumer scientists, which makes them cost-prohibitive and difficult to customize for research studies. So, Perez-Edgar and her team are creating an eye-tracking visor that is wearable, less expensive and mobile.

The initial idea for using a mobile visor came from Phil Galinsky, a do-it-yourself tech enthusiast and research technologist in the College of the Liberal Arts, who built a DIY version of the device and has recently been working on adding face detection and emotion recognition capabilities to the software.

According to Galinsky, eye tracking can provide answers to important questions about where participants are looking, how long they are looking, who or what they are ignoring, when they blink and how their pupils are reacting to various stimuli. Because this visor lets participants move around freely, it opens up even more opportunities for studying how children react to stimuli in the real world. Plus, with babies and young children who can't talk or express their emotions verbally, mobile eye tracking offers a more accurate gauge of how they're feeling.
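To make the "how long they are looking" question concrete, here is a minimal sketch of a dwell-time calculation in Python. It is an illustration only, not the team's software: the `GazeSample` and `Region` types, the rectangular region of interest and the `dwell_time` function are all hypothetical, and the sketch assumes gaze points have already been converted to pixel coordinates with a timestamp in seconds.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GazeSample:
    """One gaze point (hypothetical format): time in seconds, position in pixels."""
    t: float
    x: int
    y: int


@dataclass(frozen=True)
class Region:
    """A rectangular region of interest in pixel coordinates (assumed shape)."""
    left: int
    top: int
    right: int
    bottom: int

    def contains(self, x: int, y: int) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def dwell_time(samples: list[GazeSample], region: Region) -> tuple[float, float]:
    """Return (total seconds, longest unbroken run in seconds) spent in region.

    An interval between two consecutive samples is counted only when both
    samples fall inside the region.
    """
    total = 0.0
    longest = 0.0
    run = 0.0
    for prev, cur in zip(samples, samples[1:]):
        if region.contains(prev.x, prev.y) and region.contains(cur.x, cur.y):
            dt = cur.t - prev.t
            total += dt
            run += dt
            longest = max(longest, run)
        else:
            # Gaze left the region, so the current run ends.
            run = 0.0
    return total, longest


# Example: a made-up region around a toy and six made-up gaze samples.
toy = Region(left=100, top=100, right=200, bottom=200)
samples = [
    GazeSample(0.00, 150, 150),
    GazeSample(0.25, 160, 140),
    GazeSample(0.50, 155, 145),
    GazeSample(0.75, 400, 300),  # looks away
    GazeSample(1.00, 150, 150),
    GazeSample(1.25, 152, 151),
]
total, longest = dwell_time(samples, toy)
```

Reporting the longest unbroken run alongside the total is one possible design choice; it separates a single sustained look from many brief glances that add up to the same total.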

The technology works by using a camera and a light source to measure eye movements. While an infrared light directed at the eye reflects information about eye activity, the camera simultaneously uses optical sensors to track and gather data like gaze direction and pupil position — minute clues that can yield major insights into the inner workings of the mind.

The visor, which is connected to a wireless tablet computer worn in a backpack by the child, sends this eye-tracking information to a computer. Perez-Edgar and Galinsky are then able to view live video footage of what the child is seeing. The system also calibrates the exact point of the child’s gaze, which appears as a red dot superimposed over the video feed.
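As a rough illustration of how a gaze point could be placed on a video frame, the Python sketch below converts a normalized gaze coordinate into a pixel position where a dot could be drawn. The function name `gaze_to_pixel` is hypothetical, and the coordinate convention (values from 0 to 1 with the origin at the bottom-left of the frame) is an assumption made for this example, not a description of the visor's actual software.

```python
def gaze_to_pixel(norm_x: float, norm_y: float,
                  frame_width: int, frame_height: int) -> tuple[int, int]:
    """Map a normalized gaze point to (column, row) pixel coordinates.

    Assumes norm_x and norm_y lie in [0, 1] with the origin at the
    bottom-left of the frame; image rows are counted from the top,
    so the vertical axis is flipped.
    """
    # Clamp values that fall slightly outside the frame.
    x = min(max(norm_x, 0.0), 1.0)
    y = min(max(norm_y, 0.0), 1.0)
    column = round(x * (frame_width - 1))
    row = round((1.0 - y) * (frame_height - 1))
    return column, row


# Example with a made-up 1280x720 frame.
bottom_left = gaze_to_pixel(0.0, 0.0, 1280, 720)
top_right = gaze_to_pixel(1.0, 1.0, 1280, 720)
```

The resulting pixel position is where an overlay marker would be centered on each frame of the video feed.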

"This red dot is so important because it tells us whether the child was fixating on a perceived threat, what specifically that threat was — whether it was a toy in the room or a feature on a person’s face — and for how long,” Perez-Edgar said. “We’re going to use the visor to capture extreme reactions that could be early indicators of anxiety disorders.”

In addition to her anxiety studies, Perez-Edgar plans to use the eye-tracking device in a project, funded by a Research Initiation Grant from the Center for Online Innovation in Learning and conducted in collaboration with Marcela Borge and Heather Toomey Zimmerman, that will study how children interact in learning situations. Perez-Edgar also sees potential for the technology to be used to investigate how children with autism perceive faces and make eye contact.

Because of the visor’s adaptability to other disciplines, contributing to the open-source community was important to the researchers.

"While we're going to be using the eye-tracking visor for a range of behavior studies at Penn State, we also want to share our additions to the system with the wider research community,” Galinsky said. "In the spirit of science, we’re making the code open source to help provide access to technology that is otherwise expensive and not customizable.”

Perez-Edgar is excited about what she’ll learn about anxiety disorders once the pilot begins in the next couple of months.

“Every day, we see children who view their environments as threatening and understandably begin to withdraw and fear the world. They don’t want to interact with people or go out — even a play date with a child they don’t know might trigger a threat response,” Perez-Edgar said. “Mobile eye tracking will enable us to get participants out of the lab so we can fine-tune our understanding of their thought processes and how they actually live their lives,” she added. “This will hopefully open up a whole new area of research.”
